🤖 AI vs Human Therapists: The Truth About Mental Health Care’s Future

With millions now using AI chatbots for emotional support, one question dominates: can artificial intelligence replace human therapists? The answer is clear from recent research—but the full story reveals how AI is transforming mental health care in unexpected ways.

The rise of AI chatbots has sparked concerns across the mental health field. From ChatGPT to specialized therapy apps like Woebot and Wysa, artificial intelligence now provides emotional support to millions. But can these tools truly replace the human touch that makes therapy work?

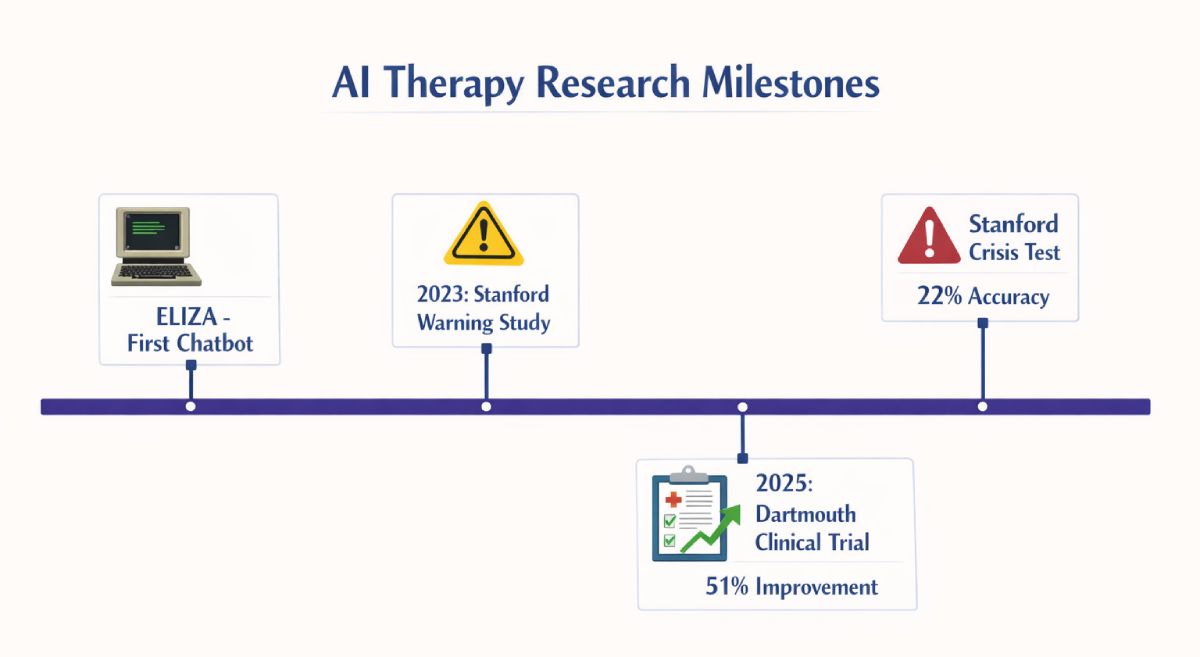

Recent research from Stanford University and clinical trials published in NEJM AI provide clear answers. The short answer: no, AI will not replace therapists. However, AI is changing how mental health care works—and understanding this shift matters whether you’re seeking help or running a practice.

Just as AI voice automation has transformed customer service by handling routine tasks while humans manage complex situations, AI in therapy serves as a powerful support tool rather than a replacement for human expertise.

Will AI Fully Replace Human Therapists?

Why the Therapeutic Relationship Cannot Be Automated

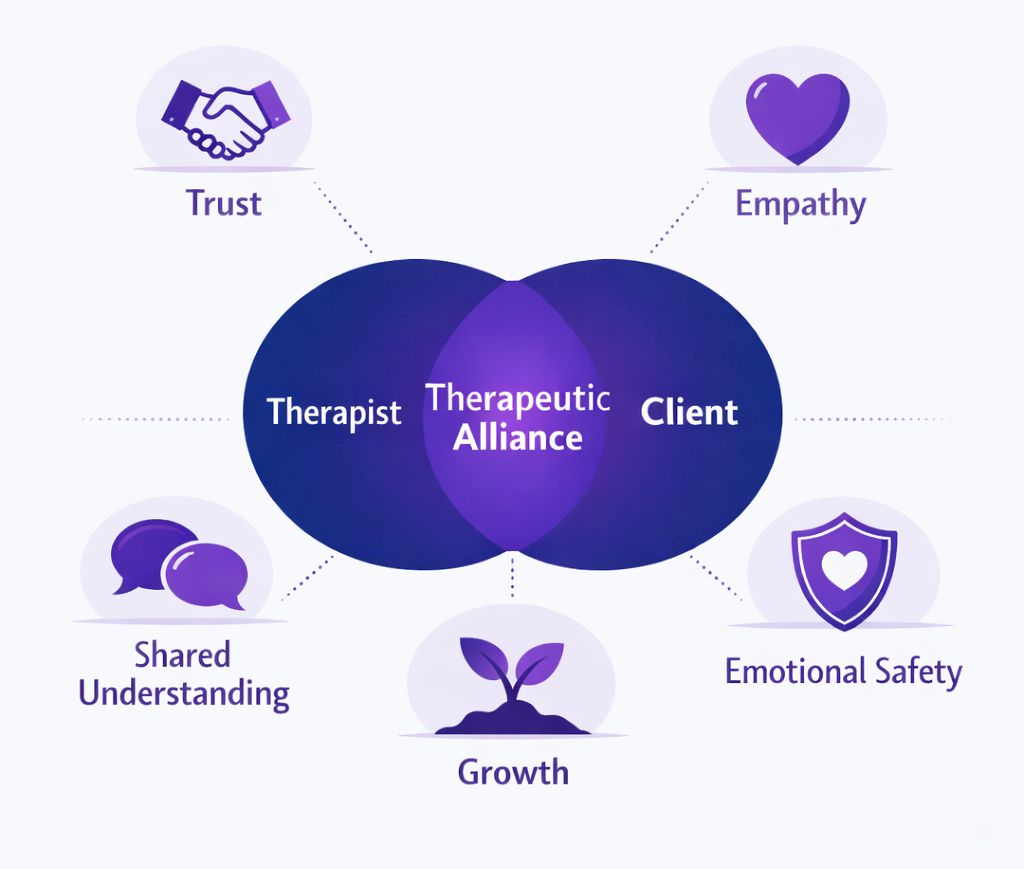

The foundation of effective therapy is not the techniques used—it’s the relationship between therapist and client. This finding appears consistently across decades of research. When you feel truly seen, understood, and cared for by another human being, your nervous system responds. You begin to heal. This is not poetry—it’s neuroscience.

AI processes patterns in data and generates responses based on millions of conversations it has analyzed. It can sound empathetic. It can say the right words. But there is no consciousness behind those words, no genuine understanding, no shared human experience. Research on empathy and AI confirms this fundamental limitation.

Three Things AI Cannot Do in Therapy:

- Form authentic emotional bonds – AI simulates empathy but does not feel it

- Adapt to subtle emotional shifts during crisis – It follows patterns, not intuition

- Provide validation from lived experience – It has no life experience to draw from

What Types of Support Could AI Eventually Provide?

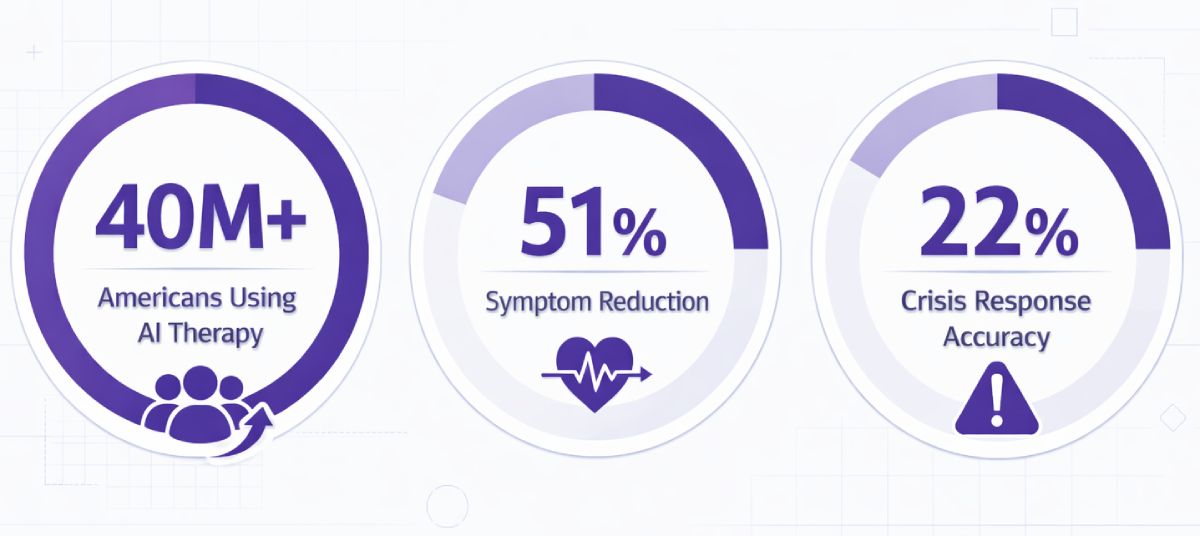

AI excels at structured, task-based mental health support. A 2025 clinical trial from Dartmouth found that people with depression showed a 51% average reduction in symptoms after four weeks of using an AI therapy chatbot. However, the study carefully excluded people with complex conditions, active suicidal thoughts, or substance abuse, and it required constant human oversight.

AI works well for psychoeducation (teaching about mental health), mood tracking, and practicing simple coping skills. It provides consistent availability and never gets tired. For someone learning cognitive behavioral therapy (CBT) techniques, an AI can offer unlimited practice opportunities between sessions with their human therapist.

But when it comes to complex trauma, relationship dynamics, or situations requiring nuanced judgment, AI falls short. These areas demand what makes us human: the ability to hold space for difficult emotions, draw on personal wisdom, and provide the kind of presence that only another person can offer. Similar to how businesses use AI voicemail systems for routine communications while humans handle sensitive conversations, mental health care benefits from this division of labor.

How Mental Health Professionals Use AI Today

The mental health field is not waiting to see if AI will arrive—professionals are already integrating it into practice. However, they’re doing so strategically, focusing on areas where AI adds value without compromising care quality.

Administrative Liberation Through AI

The most successful AI applications in therapy are administrative. Therapists spend up to 30% of their time on paperwork—writing session notes, scheduling appointments, billing insurance companies. AI can handle much of this work.

This administrative support mirrors how AI customer service systems free human representatives from routine tasks. The result is not replacement but enhancement—professionals spend more time doing what only humans can do.

AI as a Bridge, Not a Destination

Some therapists recommend AI chatbots to clients for between-session support. If you’re working on anxiety management and learning new coping skills in therapy, an AI app can help you practice those skills daily. The AI reinforces what you learned but does not replace the deeper work happening in your sessions.

However, therapists are cautious. Professional organizations including the American Psychological Association have issued statements emphasizing that AI chatbots are not substitutes for licensed therapy. The concern is that people will delay getting proper care because they’re using an AI app that makes them feel slightly better.

AI Support vs. Human Therapy: When to Choose Each

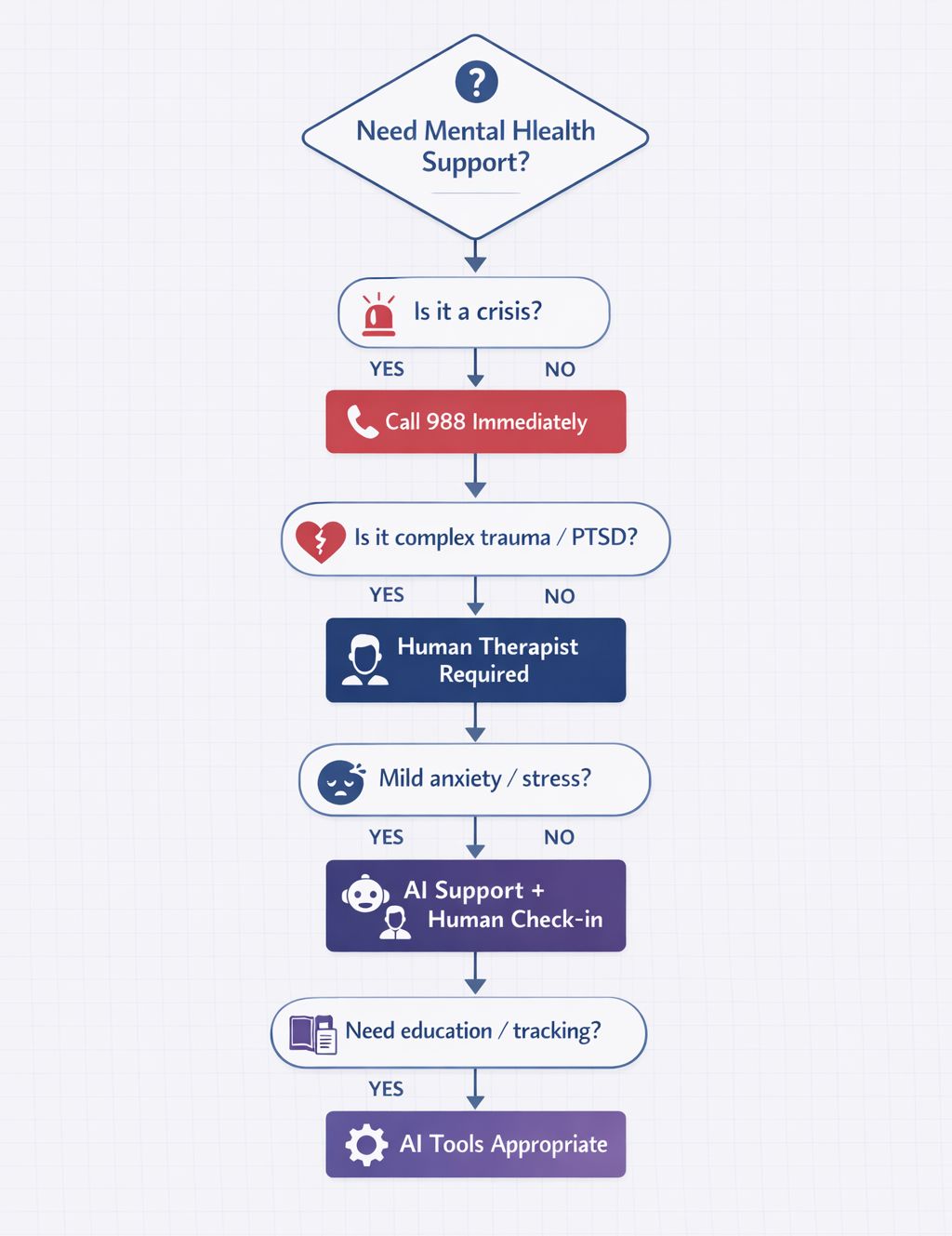

Understanding when to use AI versus human therapy can help you make better decisions about your mental health. The key is recognizing that these are not competing options but complementary tools designed for different purposes.

| Situation | Best Choice | Why |
|---|---|---|
| Learning about mental health conditions | AI Support ✓ | AI provides accurate, immediate information |
| Tracking daily moods and habits | AI Support ✓ | AI excels at consistent data collection |
| Between therapy sessions | AI Support ✓ | AI reinforces skills learned in therapy |
| Complex trauma or PTSD | Human Therapist ✓✓ | Requires specialized expertise and safety |
| Relationship or family issues | Human Therapist ✓✓ | Needs understanding of human dynamics |
| Any crisis situation | Human Therapist ✓✓ | AI fails crisis assessment; call 988 |
| Grief or major life transitions | Human Therapist ✓✓ | Requires genuine human presence |

The Research on Outcomes

Limited research exists comparing AI therapy to human therapy directly. The studies we have show that some people report symptom reduction with AI chatbots for mild to moderate anxiety and depression. However, these improvements often fade without human support.

Human therapy has 75 years of research proving its effectiveness. Approximately 75% of people who enter therapy benefit from it, and those benefits tend to last because therapy helps you develop lasting skills and insights. You also build a relationship with someone who knows your history and can help you navigate future challenges—something no AI can provide.

Critical Limitations: What AI Will Never Do

The Empathy Problem

Empathy requires consciousness and emotional experience. When a human therapist expresses empathy, they draw on their own emotional life. They remember times they felt scared, sad, or overwhelmed. They use these memories to understand what you’re going through. This is genuine empathy.

AI simulates empathy by recognizing patterns and generating caring-sounding responses. It has learned from millions of conversations what people typically say when they want to be supportive. But the AI does not feel anything. As research on empathic AI demonstrates, there is no consciousness behind the words, and this difference matters profoundly in therapeutic outcomes.

When AI Faces Complex Mental Health Issues

AI struggles with anything requiring nuanced judgment. Consider someone with both depression and an eating disorder. Their symptoms interact in complex ways—restricting food for control, which worsens depression, which increases restriction. A human therapist understands these feedback loops and adjusts treatment accordingly. AI cannot make these sophisticated clinical judgments.

Cultural context poses another major challenge. Mental health symptoms express differently across cultures. What looks like social anxiety in one culture might be appropriate respect in another. Human therapists consider these factors. AI processes text without understanding the deeper cultural meaning.

🎯 Transform Your Business Communication with AI—The Right Way

Just as therapy requires the right balance of AI efficiency and human connection, your business deserves communication solutions that combine advanced technology with personal touch. MissNoCalls delivers AI-powered voice automation that handles routine interactions while preserving the human element for what matters most.

Our AI answering service captures every opportunity while our Sales Call AI drives revenue—all with customizable voice cloning and natural language processing that feels genuinely human.

Common Questions About AI and Therapists

Can AI chatbots replace a licensed therapist?

No, AI chatbots cannot replace a licensed therapist for comprehensive mental health care. AI works best for basic education, mood tracking, and support between therapy sessions with a human professional. Research consistently shows that the therapeutic alliance (the bond between therapist and client) is essential for effective treatment, and AI fundamentally cannot replicate this human connection.

Is it safe to share personal information with AI mental health apps?

Most AI mental health apps are not covered by HIPAA, the US privacy law that protects information shared with licensed therapists. Before using any app, read the privacy policy carefully. Look for apps that explicitly state they are HIPAA-compliant, use encryption, and do not share or sell user data. Never share information about self-harm or crisis situations with an AI app; call 988 (the US Suicide & Crisis Lifeline) or a local crisis hotline instead.

Which mental health concerns can AI help with?

AI shows the most promise for mild to moderate depression and anxiety, particularly when using cognitive behavioral therapy (CBT) techniques. AI can help with stress management, basic coping skills, and psychoeducation about mental health. However, AI should not be used for complex conditions like PTSD, bipolar disorder, schizophrenia, or any crisis situation without human professional oversight.

Will AI eliminate therapist jobs?

No, AI is not expected to eliminate therapist jobs. The demand for mental health services far exceeds the supply of providers. AI might change what therapists do, shifting their focus from routine cases to complex situations, but the need for human mental health professionals will remain strong. Therapists who embrace AI as a tool for administrative efficiency will likely be more successful than those who resist technological change.

How do therapists use AI today?

Therapists primarily use AI for administrative tasks like note-taking, scheduling, and progress tracking. Some therapists recommend AI apps to clients for between-session support and skill practice. Because paperwork can consume up to 30% of a therapist's time, offloading part of it to AI frees therapists to spend more time with clients. However, treatment decisions and therapy sessions themselves remain entirely human activities requiring professional judgment and empathy.

The Future: Collaboration, Not Replacement

AI will not replace therapists, but it will change how mental health care works. The technology offers real benefits—increased accessibility, 24/7 support, and reduced administrative burden. However, these benefits come with significant limitations that cannot be engineered away.

The future of mental health care involves both AI and human professionals working together. AI will handle education, monitoring, and basic support. Humans will provide empathy, complex treatment, and crisis intervention. This division of labor allows each to do what they do best.

Key Takeaways:

- AI cannot replace the therapeutic relationship that drives healing

- Use AI for education, tracking, and between-session support only

- Choose human therapists for complex conditions, trauma, and crisis situations

- Privacy risks exist with most AI mental health apps—read policies carefully

- The future combines AI efficiency with irreplaceable human connection

If you’re struggling with your mental health, consider your options carefully. AI tools can provide helpful information and support for mild concerns. However, if you’re dealing with serious symptoms, trauma, or crisis, seek human care. The investment in working with a licensed therapist pays off in lasting healing and personal growth.

Just as businesses benefit from AI solutions tailored to their industry while maintaining human oversight for complex situations, mental health care thrives when we use AI thoughtfully as a support tool rather than a replacement for human connection. Technology serves people—not the other way around.